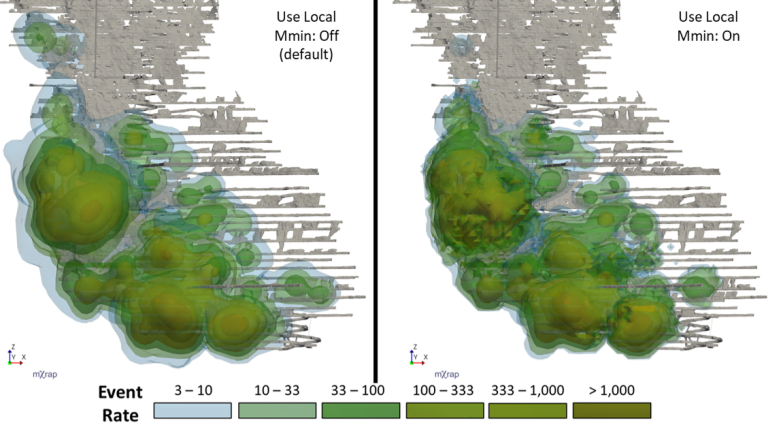

Hazard Assessment – Event Rate

The Hazard Assessment application uses a grid-based approach to describe the seismic hazard throughout your mine. Each grid point represents a seismic source with its own frequency-magnitude relationship, which is defined by four parameters: the MUL, Mmin, b-value, and event rate. We haven't looked at the event rate in detail yet, so this post focuses on it. Event rate sounds like a simple calculation, but there are quite a few complexities worth explaining.
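To show how the four parameters might combine at a single grid point, here is a minimal sketch. It assumes a doubly truncated Gutenberg-Richter relationship, where the event rate is the rate of events at or above Mmin and no events exceed the MUL. This functional form and the function name `exceedance_rate` are illustrative assumptions, not necessarily what the Hazard Assessment application uses internally.

```python
def exceedance_rate(m, event_rate, m_min, mul, b_value):
    """Rate of events with magnitude >= m at one grid point (hypothetical helper).

    Assumes a doubly truncated Gutenberg-Richter relationship:
      - event_rate is the rate of events with magnitude >= m_min
      - no events are larger than mul (the magnitude upper limit)
      - b_value controls how quickly the rate falls off with magnitude
    The result is in the same time unit as event_rate.
    """
    if m < m_min:
        # The relationship is only meant to describe magnitudes at or above Mmin.
        raise ValueError("m must be >= m_min")
    if m >= mul:
        # Truncation: no events are expected at or above the upper limit.
        return 0.0
    # Fraction of the events above m_min that also exceed m, renormalised so
    # the fraction is exactly 1 at m_min and falls to 0 at mul.
    num = 10 ** (-b_value * (m - m_min)) - 10 ** (-b_value * (mul - m_min))
    den = 1.0 - 10 ** (-b_value * (mul - m_min))
    return event_rate * num / den


if __name__ == "__main__":
    # Hypothetical grid point: 50 events >= Mmin, Mmin = -1.0, MUL = 2.5, b = 1.0.
    for m in (-1.0, 0.0, 1.0, 2.0):
        print(m, exceedance_rate(m, event_rate=50.0, m_min=-1.0, mul=2.5, b_value=1.0))
```

Under this assumption the event rate acts as a simple scaling factor: doubling it doubles the expected rate at every magnitude, while the b-value and MUL only change the shape of the curve.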